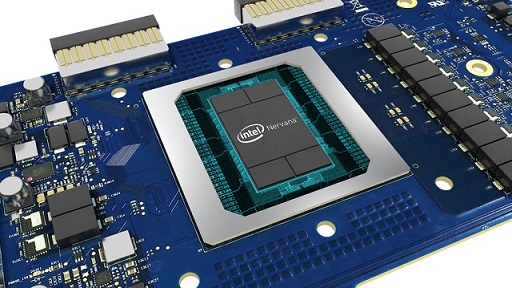

Intel, in collaboration with Facebook, is bringing the Nervana NNP to market. According to the chipmaker, the architecture behind the new AI-focused processor is known as Lake Crest.

Intel recently revealed the Nervana Neural Network Processor (NNP), previously known as “Lake Crest,” a chip that took three years to complete and is designed specifically for AI and deep learning.

Intel described the chip’s unique architecture and shared the news that Facebook has been a close collaborator in its effort to bring the Nervana NNP to market.

Naveen Rao, corporate VP of Intel’s Artificial Intelligence Products Group, said,

“While there are platforms available for deep learning applications, this is the first of its kind, built from the ground up for AI, that’s commercially available.”

It’s rare for Intel to deliver a whole new class of products, he said, so the Nervana NNP family demonstrates Intel’s commitment to the AI space.

AI is revolutionizing computing, turning a computer into a “data inference machine,” Rao said. “We’re going to look back in 10 years and see that this was the inflection point.”

Intel’s strategy is to deliver silicon to a couple of close collaborators this year, including Facebook. Intel works directly with large customers like Facebook to settle on the right set of features they require, Rao explained.

Next year, customers will be able to build solutions and deploy them via the Nervana Cloud, a platform-as-a-service (PaaS) powered by Nervana technology. Alternatively, they could use the Nervana Deep Learning appliance.

Intel CEO Brian Krzanich said,

“The Nervana NNP will enable companies to develop entirely new classes of AI applications that maximize the amount of data processed and enable customers to find greater insights–transforming their businesses.”

For instance, social media companies like Facebook will be able to deliver more personalized experiences to users and more targeted reach to advertisers.

He also mentioned other use cases, such as early diagnostic tools in the health care industry, advances in weather prediction, and improvements in autonomous driving.

With multiple generations of Nervana NNP products in the pipeline, Intel says it is on track to meet or even exceed its 2016 goal of a 100-fold increase in deep learning training performance by 2020. Intel plans to release Nervana products on an annual cadence, or possibly faster.

The Nervana NNP has three unique architectural characteristics that offer the flexibility to support deep learning primitives while making core hardware components as efficient as possible.

With the addition of the Nervana NNP, Intel now offers chips for a full spectrum of AI use cases, Rao said. It complements Intel’s other products used for AI applications, including Xeon Scalable processors and FPGAs.

“We look at this as a portfolio approach, and we’re uniquely positioned to take that approach,” he said. “We can really find the best solution for our customer, not just a one-size-fits-all kind of model.”

For example, if a new customer were at the beginning of their “AI journey,” Rao said,

“We have the tools to get them up and running quickly on a CPU, which they probably already have.” As their needs grow, “we have that growth path for them,” he continued, calling the Nervana NNP the “ultimate high performance solution” for deep learning.